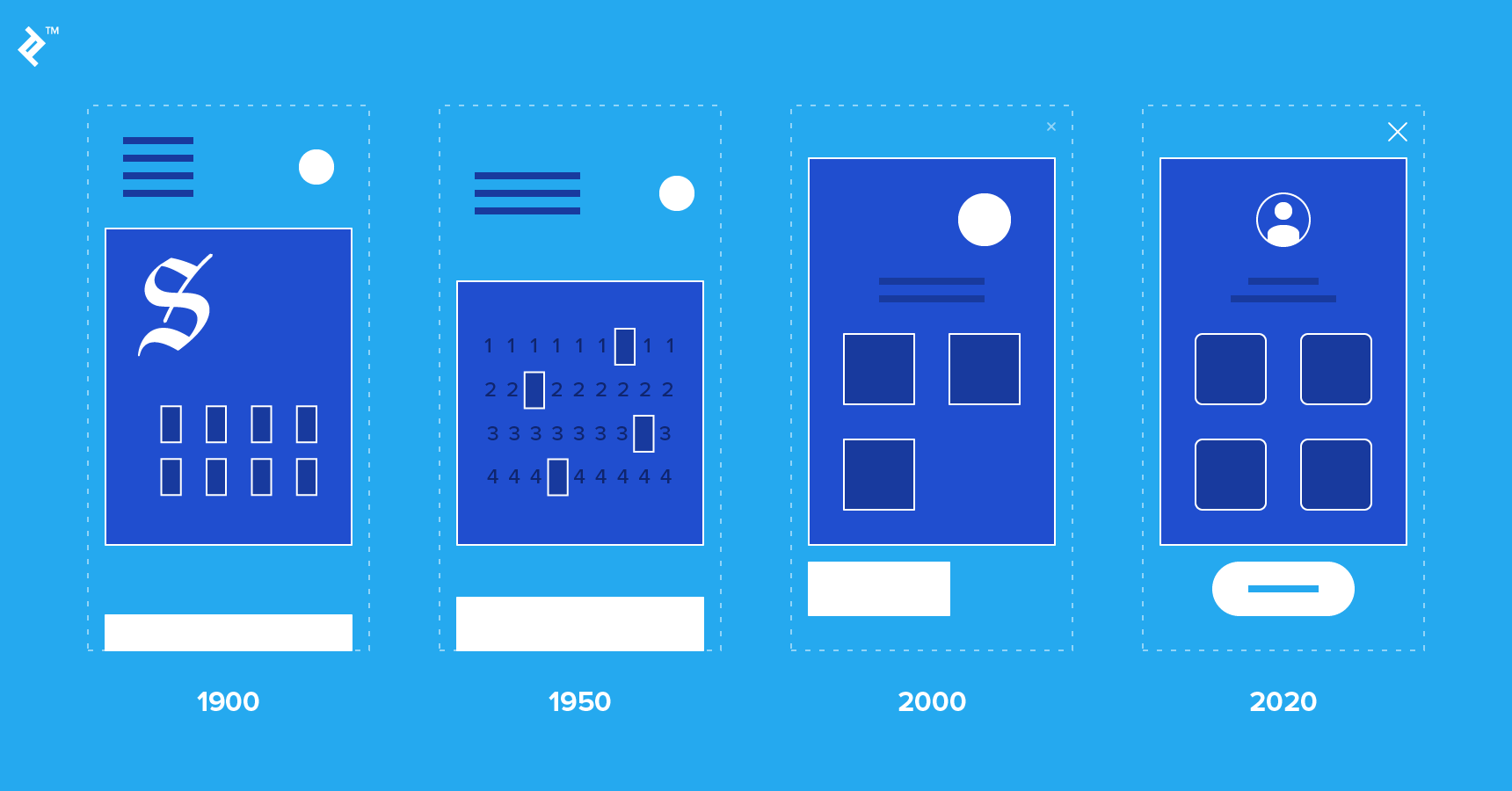

User interfaces (UIs) are how we interact with digital devices, and their evolution has been a fascinating journey. From the early days of physical buttons and knobs to the touchscreens, voice commands, and gesture recognition of today, UIs have transformed the way we engage with technology. In this article, we’ll explore the evolution of user interfaces, their impact on technology, and what the future might hold.

Physical Buttons and Knobs

The earliest user interfaces relied heavily on physical buttons and knobs. These tactile interfaces were used in devices ranging from typewriters to early computers. Users interacted with these devices by physically pressing buttons or turning knobs to input commands or data. The development of keyboards, mice, and game controllers expanded the possibilities for interacting with computers and gaming consoles.

Physical buttons and knobs offered a straightforward, tactile way to input information, but each control was tied to a fixed function, which limited how complex or versatile a device could be. Mechanical parts also wear out, so maintenance and durability could become issues over time.

Graphical User Interfaces (GUIs)

The introduction of graphical user interfaces (GUIs) marked a significant leap forward in user interaction. GUIs replaced text-based interfaces with visual elements like icons, windows, and menus. The Xerox Star, released in 1981, is considered one of the first commercial computers to feature a GUI. However, it was the Apple Macintosh in 1984 that popularized GUIs for a broader audience.

GUIs made computers more accessible to the general public. The use of a mouse to point and click on graphical elements allowed users to navigate and interact with computers in a more intuitive manner. Microsoft Windows and the various iterations of the Mac OS further refined GUIs and established them as the standard interface for personal computers.

Touchscreens and Mobile Devices

The introduction of touchscreens brought a revolutionary change to user interfaces. Devices like the iPhone, released in 2007, made touch-based interactions mainstream. Touchscreens enabled users to interact directly with content on the screen, reducing the need for external peripherals like mice or keyboards.

The success of mobile devices, coupled with advances in touch technology, led to the proliferation of touchscreen interfaces across various products, from smartphones and tablets to ATMs and kiosks. Multi-touch gestures such as pinch-to-zoom, along with swipe actions, became common ways to interact with digital content.
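For readers curious about what happens behind a gesture like pinch-to-zoom, here is a minimal sketch in Python. It assumes a simplified model in which the zoom factor is the ratio of the distance between two fingers at the end of the gesture to their distance at the start. The function name `pinch_scale` and the coordinate format are made up for illustration and are not taken from any particular touch framework.

```python
import math


def pinch_scale(start_a, start_b, end_a, end_b):
    """Return the zoom factor implied by two fingers moving apart or together.

    Each argument is an (x, y) touch position in pixels. A result above 1.0
    means the fingers spread apart (zoom in); below 1.0 means they pinched
    together (zoom out).
    """
    start_distance = math.dist(start_a, start_b)
    end_distance = math.dist(end_a, end_b)
    if start_distance == 0:
        raise ValueError("the two fingers must start at different points")
    return end_distance / start_distance


# Two fingers start 100 px apart and end 200 px apart.
print(pinch_scale((100, 100), (200, 100), (50, 100), (250, 100)))  # 2.0
```

Real touch frameworks typically add smoothing and limits on how far content can be scaled, but the underlying idea is often this kind of distance ratio.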

Voice Recognition and Virtual Assistants

Voice recognition technology, often powered by artificial intelligence, has gained prominence as a user interface. Virtual assistants like Apple’s Siri, Amazon’s Alexa, and Google Assistant allow users to perform tasks and access information by speaking to their devices. Voice commands can be used for various purposes, from setting reminders and controlling smart home devices to searching the web and making calls.

Voice recognition has found applications beyond virtual assistants, including voice-controlled car infotainment systems, transcription services, and accessibility tools for individuals with disabilities. This form of user interface offers a hands-free and convenient way to interact with technology.

Gesture Recognition and Motion Control

Gesture recognition and motion control interfaces have gained traction in gaming and other applications. The Microsoft Kinect uses cameras and depth sensors to track users’ body movements and gestures, while the PlayStation Move tracks a handheld controller using a camera and motion sensors. This technology has enabled immersive gaming experiences and has also been used for applications like virtual reality (VR) and augmented reality (AR).

In the realm of smartphones, gesture-based navigation systems have emerged. Users can navigate their devices by making specific gestures, reducing their reliance on physical buttons. For example, swiping up from the bottom of the screen can replace the traditional home button.
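As a rough illustration of how a device might decide that a touch counts as a "go home" swipe, the sketch below checks three things: the touch starts near the bottom edge, it travels far enough upward, and it moves more vertically than horizontally. The function name `is_home_swipe` and the pixel thresholds are hypothetical values chosen for the example, not figures from any real operating system.

```python
def is_home_swipe(start, end, screen_height, edge_px=40, min_travel_px=120):
    """Decide whether a touch from start to end looks like a swipe up from the bottom.

    start and end are (x, y) positions in pixels, with y increasing downward
    from the top of the screen. edge_px and min_travel_px are illustrative
    thresholds only.
    """
    start_x, start_y = start
    end_x, end_y = end

    starts_at_bottom_edge = start_y >= screen_height - edge_px
    upward_travel = start_y - end_y
    mostly_vertical = upward_travel > abs(end_x - start_x)

    return starts_at_bottom_edge and upward_travel >= min_travel_px and mostly_vertical


# A touch that begins 10 px above the bottom of an 800 px tall screen and moves up 290 px.
print(is_home_swipe((200, 790), (210, 500), screen_height=800))  # True
```

Production gesture systems usually also consider speed and timing, which this sketch leaves out.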

Virtual Reality (VR) and Augmented Reality (AR)

Virtual reality (VR) and augmented reality (AR) have ushered in new ways of interacting with digital environments. VR immerses users in a fully digital world, while AR overlays digital information onto the real world. These technologies often employ hand controllers, haptic feedback, and head tracking to provide a highly interactive and immersive experience.

VR and AR have applications in gaming, education, training, and simulations, as well as in fields like healthcare, where AR can assist in surgeries or remote consultations.

The Future: Beyond Screens

The future of user interfaces is likely to extend beyond traditional screens and physical controls. Several exciting developments are on the horizon:

Wearable Technology: Wearable devices like smartwatches and AR glasses are redefining how we interact with technology. They offer glanceable information and gesture-based controls.

Brain-Computer Interfaces (BCIs): BCIs are an emerging field that aims to enable direct communication between the brain and digital devices. The goal is for users to control technology using their thoughts, opening up new possibilities for individuals with disabilities and beyond.

Haptic Feedback: Advances in haptic feedback technology aim to replicate the sense of touch in digital experiences, making it possible to “feel” and interact with virtual objects.

Neural Interfaces: Closely related to BCIs, research into neural interfaces explores the broader connection between the nervous system and computers, which could eventually give users new ways to control devices and receive feedback from them.

Ubiquitous Computing: The concept of ubiquitous computing envisions a world where technology seamlessly integrates into our environment, allowing us to interact with it naturally and intuitively.

Challenges and Considerations

The evolution of user interfaces comes with its own set of challenges:

Accessibility: It’s essential to ensure that new interfaces are accessible to all users, including those with disabilities.

Privacy and Security: As interfaces become more interconnected and reliant on personal data, issues related to privacy and security must be addressed.

Learning Curve: The rapid development of interfaces can pose a learning curve for users who need to adapt to new interaction methods.

Ethical Considerations: The ethical use of emerging technologies, such as BCIs and neural interfaces, must be carefully considered to prevent misuse and protect individuals’ rights.

Conclusion

The evolution of user interfaces has been a dynamic and transformative journey. From physical buttons and GUIs to touchscreens, voice recognition, and gesture control, the way we interact with technology continues to evolve. The future promises even more immersive and natural interfaces that extend beyond screens and into our daily lives. As technology advances, it’s crucial to weigh accessibility, privacy, and ethics so that these new interfaces benefit and empower users while respecting their rights and well-being.
